Artificial intelligence is proving increasingly critical for tapping into data acquired by the exploding volume and diversity of sensors at the tactical edge – as well as to power emerging applications that rely on this data. The fact is that the 21st-century battlefield – a core DoD focus in 2020 – does not suffer from a shortage of sensors spread across soldier wearables, vehicles, drones, video cameras, spectrum, signal and radio sensors, cyber sensors and scores of other devices that comprise the Internet of Battlefield Things.

More sensors mean more data – too much data – which limits the DoD’s ability to turn that information into actionable intelligence in a timely fashion. But AI is poised to change that equation by shifting the burden from human to machine so that only the most relevant and timely data reaches those who need it.

Most data-driven AI applications are made possible by innovations in sensor technologies that can produce exorbitant amounts of data through functions that extend beyond simple motion-detection and similar binary functions. Because of this, and with the increasing availability of inexpensive wireless long-range sensors and numerous sensor-laden autonomous systems, the future battlefield will be covered with sensors at an unprecedented scale.

As 2020 progresses, expect to see more of these historical sensor data challenges addressed, driving AI-enabled applications to the tactical edge in several key areas:

Video processing with analytics, object and threat detection

DoD invests significant manpower in monitoring data feeds – whether it’s drone video or health information from soldier wearables – for specific objects, events, threats and anomalies. Making use of this sensor-driven data typically requires a level of manpower that is not financially or operationally feasible. Efforts are underway to reduce the manual component for this type of monitoring. For example, DoD’s Project Maven has leveraged Google’s TensorFlow AI systems to analyze U.S. drone footage, detect objects of note, and then pass them on to analysts.

Automated cyber security operations

The growing volume and sophistication of cyber threats can challenge the ability to respond quickly or even pre-empt attacks. To maintain an edge over adversaries, tapping AI to automate cyber security operations is critical.

For example, last year the Naval Information Warfare Systems Command announced an AI cybersecurity challenge to automate cybersecurity operations using AI and machine learning. The hack-a-thon was a response to the growing volume of cybersecurity-related data, and to the role AI and machine learning can play in tapping this data for real-time threat response.

Sensor fusion, soldier health monitoring, and augmented reality

Col. Jerome Buller, who leads the U.S. Army Institute of Surgical Research, recently spoke on his vision for how artificial intelligence, machine learning, sensors and vision equipment could direct medics in the battlefield to those wounded soldiers they could help most. Buller noted how biometric data from soldiers’ wearables can help medics make more informed, life-saving decisions even before touching down in chaotic warzones.

EW signal processing and signal intelligence

Electronic warfare attacks have the full attention of the U.S. military, which continues to enhance its EW software and hardware for offensive and defensive operations. However, like other types of sensors, EW sensors can generate a lot of false signals, or noise. To make sense of the data and convert it to actionable information, DoD is looking for AI to filter noise and classify signals, reducing the warfighter “cognitive load” when it comes to signal detection. Recent experiments and prototypes by the Army’s Rapid Capabilities and Critical Technologies Office are showing promise toward full fielding.

Equipment predictive maintenance

Military vehicle and equipment breakdowns not only have major cost implications, but more importantly they threaten soldier safety. While it will always be a challenge to eliminate the unexpected, sensor-laden equipment that provides a steady stream of real-time data to decision makers and soldiers will get us closer to zero injuries. AI is a big reason why.

In recent years we’ve seen programs, such as one initiated by the U.S. Army, that use AI to improve combat readiness for its fleet of Bradley Fighting Vehicles by providing a real-time snapshot of equipment health to guide maintenance decisions.

Battlefield situational awareness and decision support

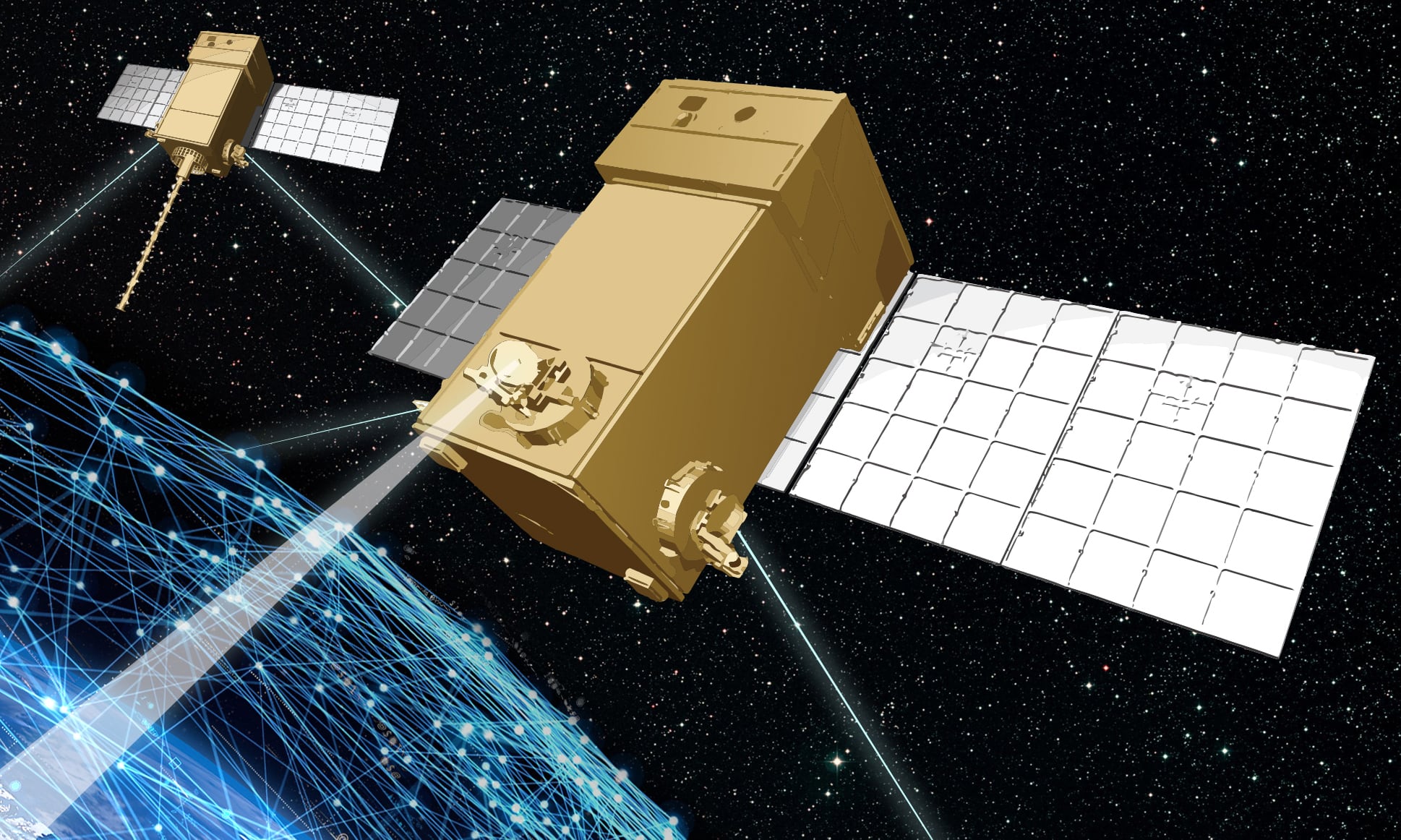

In December, the Air Force, Navy and Army conducted the first field test of the Advanced Battle Management System. ABMS was designed to connect technology across military services to better address increasingly sophisticated adversarial threats. The ABMS field test, which linked communications and sensor data collected by Air Force and Navy fighters, a naval destroyer, and an Army unit, is part of a new warfighting concept that envisions coordinated combat that spans the five warfare domains: land, sea, air, space, and cyberspace.

Key to this new concept, referred to as Joint All-Domain Command & Control (JADC2), is the real-time collection, analysis and sharing of data, as well as leveraging AI to help ensure the right data gets to the right forces for effective situational awareness and decision support.

The tactical use cases for sensor data are there. What’s needed are emerging technologies such as AI to help military leaders and warfighters make sense of that data.

Charlie Kawasaki is the chief technical officer at PacStar.