Have you ever witnessed two people talking past each other? They seem to be discussing the same topic using the same language, but you begin to wonder if they are actually talking about two different things. The public debate about the use of artificial intelligence in the Department of Defense is beginning to feel that way to me.

Some technologists have called for DoD AI ethics, but in the next breath they call for an end to programs that have never been demonstrated to be unethical. What gives?

I recently completed a study examining ethics across all scientific disciplines, and my team identified 10 ethical principles that span disciplines and international borders. With this foundation, I believe what advocates want from DoD is actually a code of conduct for how DoD will use AI: a set of rules and guidelines to which the government will hold itself accountable and that would allow technology developers and researchers to know how their work will be operationalized.

Since 1948, all members of the United Nations have been expected to uphold the Universal Declaration of Human Rights, which protects individual privacy (article 12), prohibits discrimination (articles 7 and 23), and provides other protections that could broadly be referred to as civil liberties. Then, in 1949, the Geneva Conventions were adopted, creating a legal framework for military activities and operations. Additional Protocol I to the conventions, adopted in 1977, says that weapons and methods of warfare must not “cause superfluous injury or unnecessary suffering” (article 35), and “in the conduct of military operations, constant care shall be taken to spare the civilian population, civilians and civilian objects” (article 57).

Therefore, if someone’s particular concern with DoD is its ethics of AI — such as in the imagery-analysis program Project Maven — then this person can feel confident that ethical principles already exist. If instead this person is concerned about whether he or she can trust that DoD will adhere to ethics, then creating new ethics on paper will hardly help. And those who demand the prohibition of AI in autonomous weapons altogether might see their goal realized too, since this topic seems unlikely to disappear from international debate.

So here we are, stuck in the middle of a discussion in which neither side may actually be talking about creating new ethics. Both may instead be discussing a code of conduct: a set of rules and guidelines for how AI will be used, along with monitoring and oversight mechanisms to ensure those rules are followed.

Here is one path for how developing a code of conduct might begin:

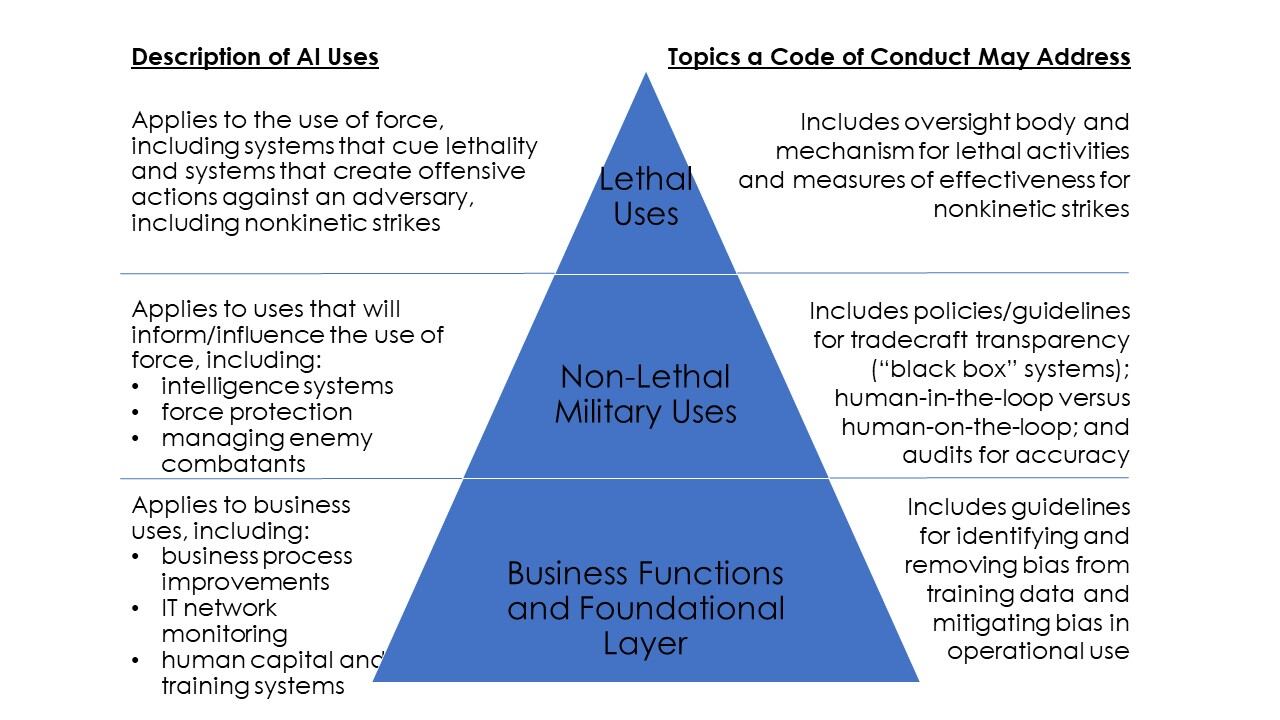

DoD could identify areas where it may use AI in the foreseeable future and begin with three categories for such uses, shown in the figure above. The bottom-most foundational layer is for all applications of AI (including business functions that would be found in any large organization); the next layer is for nonlethal military applications (such as intelligence systems, like Project Maven); and the top layer, with the greatest number of rules and oversight, is for applications that result in lethality (either directly or indirectly). Each layer would have its own rules and guidelines for AI, and the activities in each layer would have to adhere to the rules and guidelines of every layer below it in the pyramid.

To pursue this idea, DoD could bring together internal experts who know the department’s missions, programs and processes alongside external experts who advocate for vulnerable populations and oversight to develop a code of conduct for each layer.

Such a working group could be responsible for creating guidelines for the bottom-most, foundational layer that would apply to all AI uses across DoD. These applications could include IT systems that monitor for cyber intrusion or insider threat; human capital systems that assist with recruitment or reviewing resumes and job applications; and financial systems that conduct autonomous financial accounting or transactions. The working group could address topics that apply broadly across all uses of AI, such as how to mitigate bias in training data or how to ensure that training data is itself ethical.

This working group — or perhaps a second group working in parallel — could address the middle layer of specialized, nonlethal, military uses of AI and develop policies or guidelines for their use. These applications of AI could include intelligence collection and analysis systems; systems that conduct force protection around military bases and installations; systems that manage detention facilities for enemy combatants; and so on. Such uses for AI would need all of the same guidelines established in the foundational layer, but they might also need additional guidelines or policies for issues such as whether “black-box” systems will be allowed or whether explainable AI will be required; how to value “human-in-the-loop” versus “human-on-the-loop”; and how these systems will be audited to ensure they are meeting expectations.

Lastly, any use of AI that might lead to a lethal result would require the greatest level of oversight of all DoD’s AI systems. This top layer could include systems that are weaponized (even if the weapon itself is not initiated by AI) and systems that may have lethal outcomes (such as cyber tools that may result in lethal effects). One of the goals of a working group overseeing this top layer may be the creation of an oversight body that includes members from outside of the executive branch, including from the legislative branch and from nongovernmental organizations (such as civil liberties advocates and experts from academia). The working group could create policies to dictate how often programs are reviewed by this oversight body, which milestones trigger a review, and so on.

This proposal is intended to motivate further discussion. Many questions would need to be answered. Is supply chain management a foundational layer business function, or do threats from counterintelligence and foreign interference elevate it to the intermediate layer? Do existing combat rules of engagement need to change for AI, or are current oversight mechanisms for the lethal layer already sufficient? Would any rules or guidelines that are created for DoD apply to the CIA, the State Department, the FBI and other departments and agencies?

For as long as society has achieved technological advancements, people have sought ways to weaponize or militarize them. Democratic societies can make decisions that are representative of their citizens’ values and stand up to public scrutiny. It is these values that distinguish the U.S. from its adversaries. The Defense Innovation Board’s public listening sessions are an example of the department demonstrating these values, and the National Security Commission on Artificial Intelligence has an opportunity to create whole-of-government leadership across departments. In order to advance these discussions effectively, the U.S. government must frame the militarization of AI in a manner that reflects the values of its stakeholders and of American society.

Cortney Weinbaum is a management scientist specializing in intelligence topics at the nonprofit, nonpartisan RAND Corporation.