The U.S. Army is working to improve its network at a rapid pace, increasing bandwidth, lowering latency and making it more robust for the future fight. So why does it want to send less data over that network?

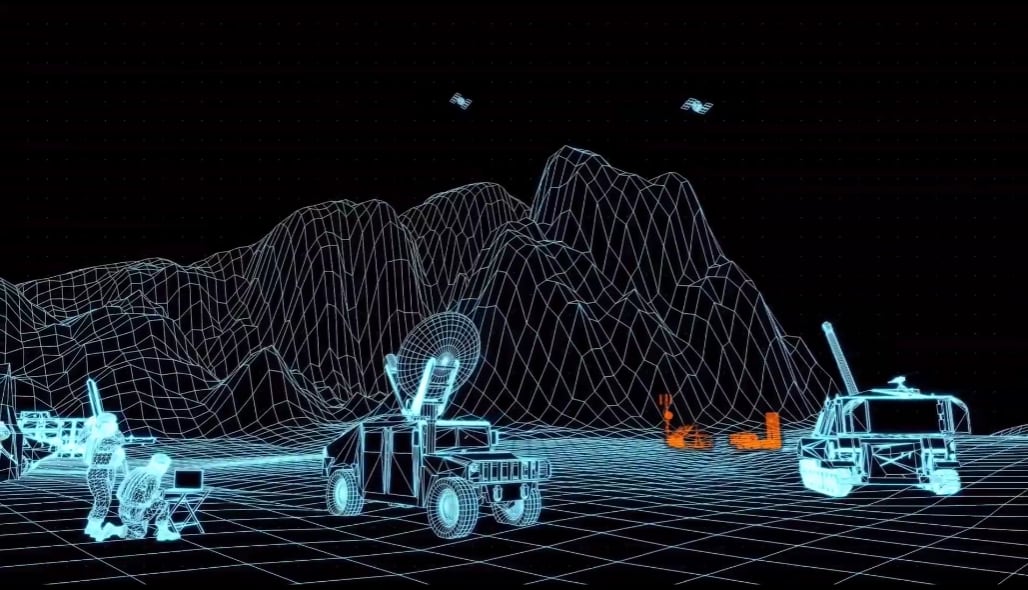

At the virtual Association of the U.S. Army conference this week, officials emphasized that in order to get sensor data to weapons systems even faster, they need to push computing to the edge. And to hit deep-lying, protected targets, the Army needs to see farther to sense potential threats, create targeting data and send a solution to the best fires system for rapid response.

The concept is commonly referred to as the sensor-to-shooter timeline, and the Army is putting considerable effort into shortening that timeline so it can respond to threats effectively. Part of that effort will involve shifting the processing step from the command post to the sensor, Army officials said.

This is known as edge processing.

“Edge processing is something that we’re very interested in for a number of reasons. And what I mean by that is having smart sensors that can not only detect the enemy, [but] identify, characterize and locate, and do all those tasks at the sensor processing,” said John Strycula, the director of the Army’s task force focused on intelligence, surveillance and reconnaissance.

Processing at the sensor provides two major benefits. First, artificial intelligence will process that data faster than a human could. Second, if data is processed at the edge, the sensor doesn’t have to take up massive bandwidth to send all of the raw data it’s collecting back through the network; it just needs to send its final product.

“If I only have to send back a simple message from the sensor that says the target is here ― here’s the location and here’s what I saw and here’s my percent confidence ― versus sending back the whole image across the network, it reduces those bandwidth requirements,” Strycula said.

“We are trading network for compute in a lot of areas, and what I mean by that is we are adding compute to places it was never meant to be ― never envisioned to be ― such as on the sensor itself or on the platform co-located with the sensor,” said Alexander Miller, senior G-2 science and technology adviser. “By trading that compute for the networking time, we don’t have to leverage so much of the network to move those data.”

But that trade-off involves risk, Miller noted. Commanders and operators won’t get the full picture they might receive from more raw data. That means they will need to trust the AI systems to send them the accurate data they need.

In September, the Army demonstrated some of those burgeoning artificial intelligence capabilities at Project Convergence, the service’s campaign of learning to integrate new AI, sensing and network capabilities.

Take the Dead Center payload, for example. Once installed on a Gray Eagle drone, the AI system processed all of the sensor data collected by the drone. Rather than having the Gray Eagle send that raw data to a command post for processing, only to send it back to the drone, Dead Center was able to automatically detect threats and create targeting data.

“One of the key takeaways [of Project Convergence] is we need to get out of the business of just pushing data,” said Douglas Matty, director of AI capabilities at Army Futures Command.

The Tactical Intelligence Targeting Access Node, or TITAN — a new scalable, portable ground station under development — and its AI counterpart, Prometheus, are being built to perform a similar task. At Project Convergence, a TITAN surrogate was able to take imagery from satellites, turn it into targeting data and then send that solution over satellite communications to operators. That process drastically reduced the amount of bandwidth used in the sensor-to-shooter chain.

Using AI at Project Convergence, the Army was able to reduce the sensor-to-shooter timeline from 20 minutes to 20 seconds, a 60-fold reduction.

“What we realized there is that with the advances in what some call algorithmic warfare, you no longer have to push all of the data to all of the nodes,” Matty explained. “You can process and have requisite compute and storage at location to handle the data at point of need, and then share that information to facilitate the collaboration.”

Nathan Strout covers space, unmanned and intelligence systems for C4ISRNET.